Velocity, not headcount, is becoming the ultimate moat.

Generative AI is the star of every board deck and every X or LinkedIn thread, but new telemetry from Anthropic suggests the real drama is happening below the headline. After poring over 500,000+ live interactions with its Claude models, Anthropic found that Claude Code, its tool‑using "agent" optimised for building software, has been embraced unevenly. A handful of teams are already running laps while others are still stretching on the sidelines.

This pattern is straight out of the history books of General‑Purpose Technologies (GPTs). Electricity, the internet, the smartphone: each rewired the economy, but only after early adopters redesigned their workflows around the new capability. AI, it turns out, is following the same adoption S‑curve—just compressed into quarters instead of decades.

From Hype to Hard Data

Anthropic’s study compared behaviour on the vanilla Claude.ai chatbot with usage of Claude Code. Where the former is an all‑purpose conversationalist, the latter can chain tools, write and execute code, and return finished artefacts—think co‑pilot versus co‑builder.

- Sample size: 500,000+ real‑world prompts

- Timeframe: Q4 2024 – Q1 2025

- Focus: Identifying who uses which model, for what, and how deeply the AI is embedded in the workflow

Three findings jump off the spreadsheet.

1 — Startups Are Flooring the Accelerator

Startups account for roughly one‑third (≈33 %) of all Claude Code usage. Enterprises, despite their headcount advantage, show up in only 13–24 % of those conversations.

Why the gap? Brynjolfsson and McAfee’s classic HBR piece on GPTs argued that the real cost isn’t the tech; it’s the complementary investments—process redesign, up‑skilling, governance tweaks. Startups, unburdened by legacy ERPs and eight layers of sign‑off, treat those investments as Tuesday afternoon projects. Enterprises file a ticket and form a steering committee.

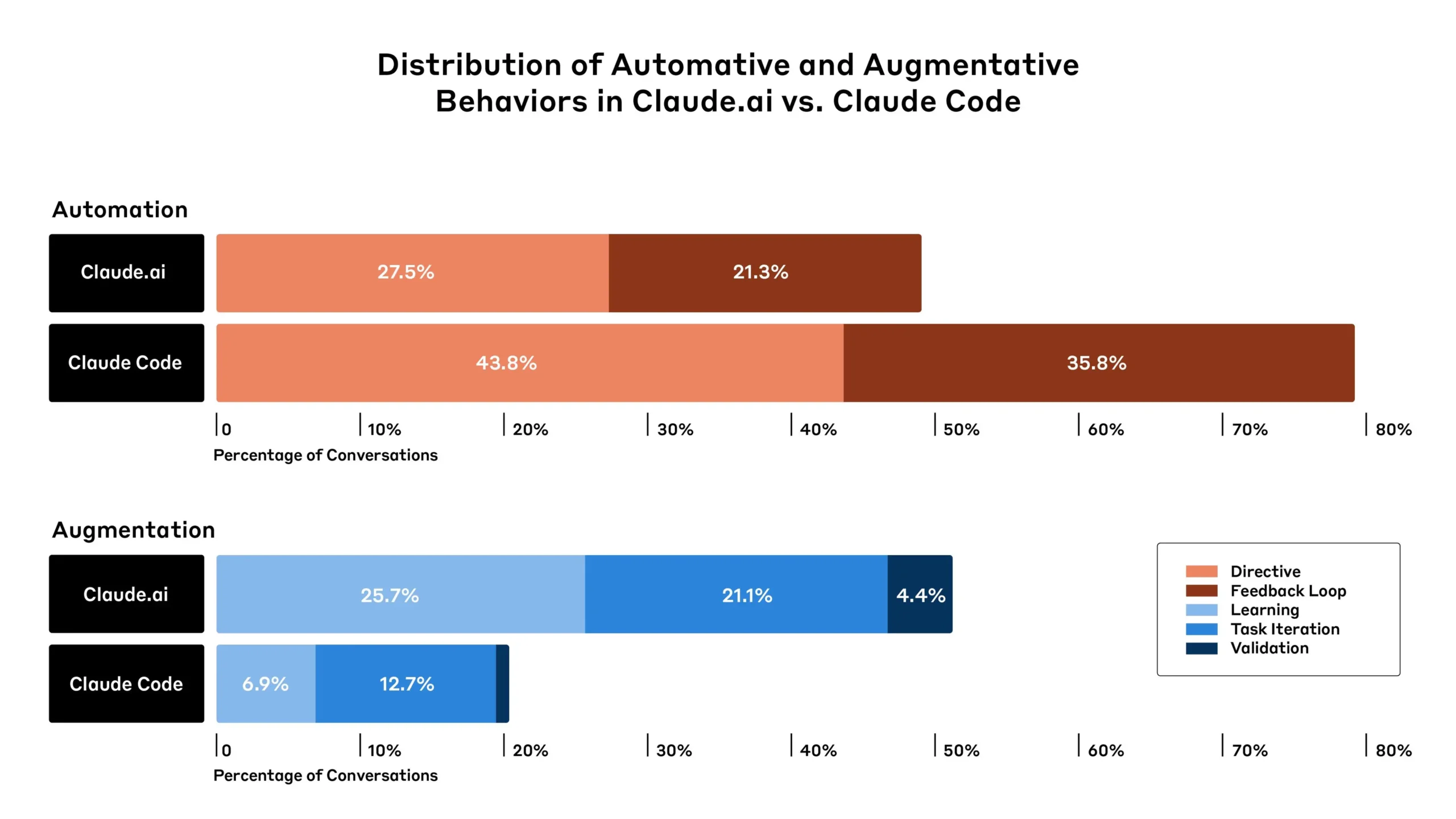

2 — Automation, Not Just Augmentation

On Claude Code, 79 % of sessions culminated in the agent finishing the task autonomously—writing production code, shipping a script, closing the loop. The generic chatbot managed that feat in 49 % of cases.

This is the difference between AI as a search engine with swagger and AI as an execution layer. The former speeds up the thinking; the latter collapses the doing.

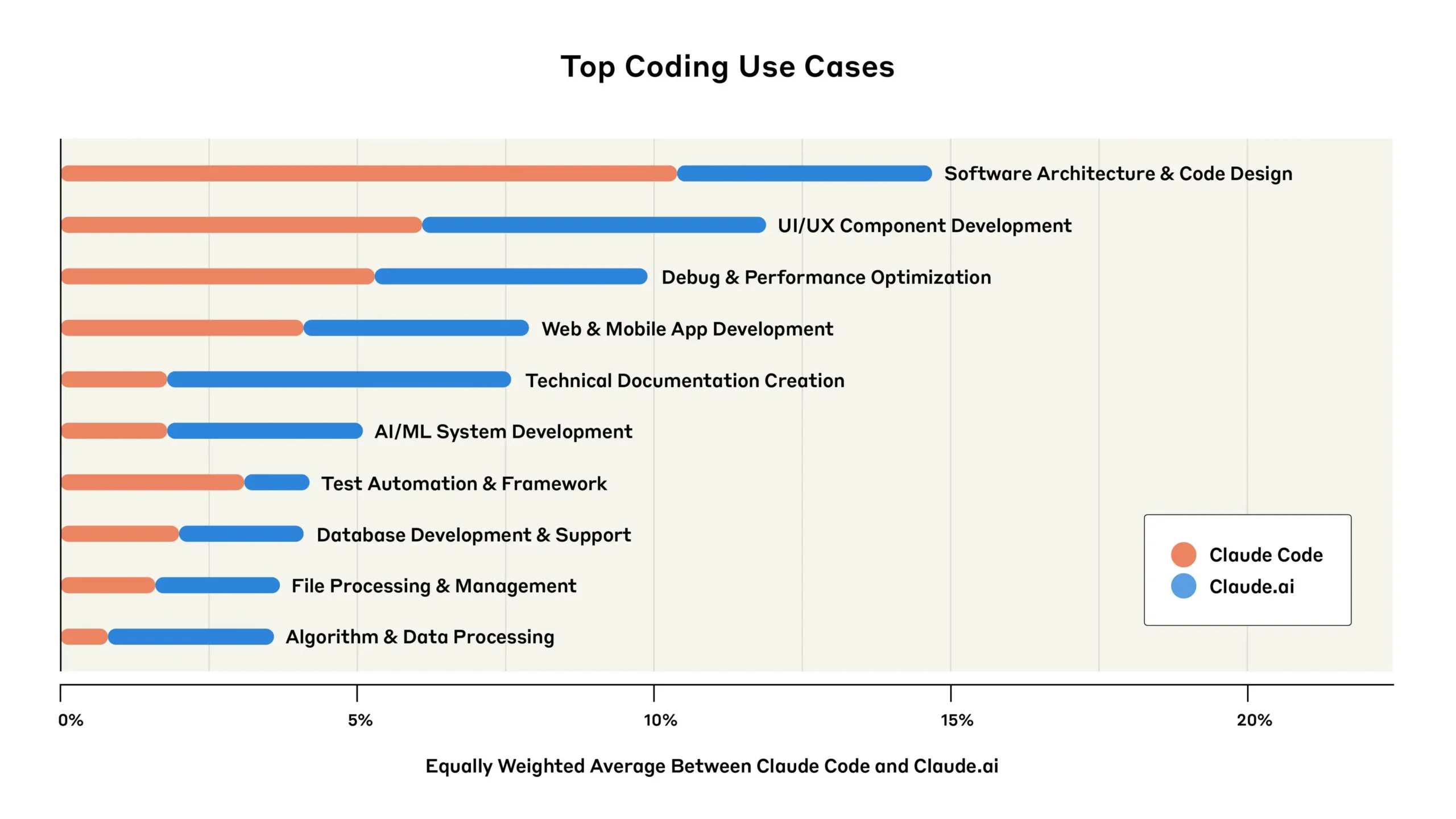

3 — Building the "Front Door" Faster

Which tasks go agent‑first the soonest? Anything user‑facing. JavaScript, HTML and CSS dominate the language mix; UI component scaffolding tops the task list. The practice has picked up the nickname "vibe coding", a term coined by Andrej Karpathy: spinning up a polished interface in hours, then iterating live with customers.

For startups, the front door is the product. Shaving days off the design‑build loop is existential.

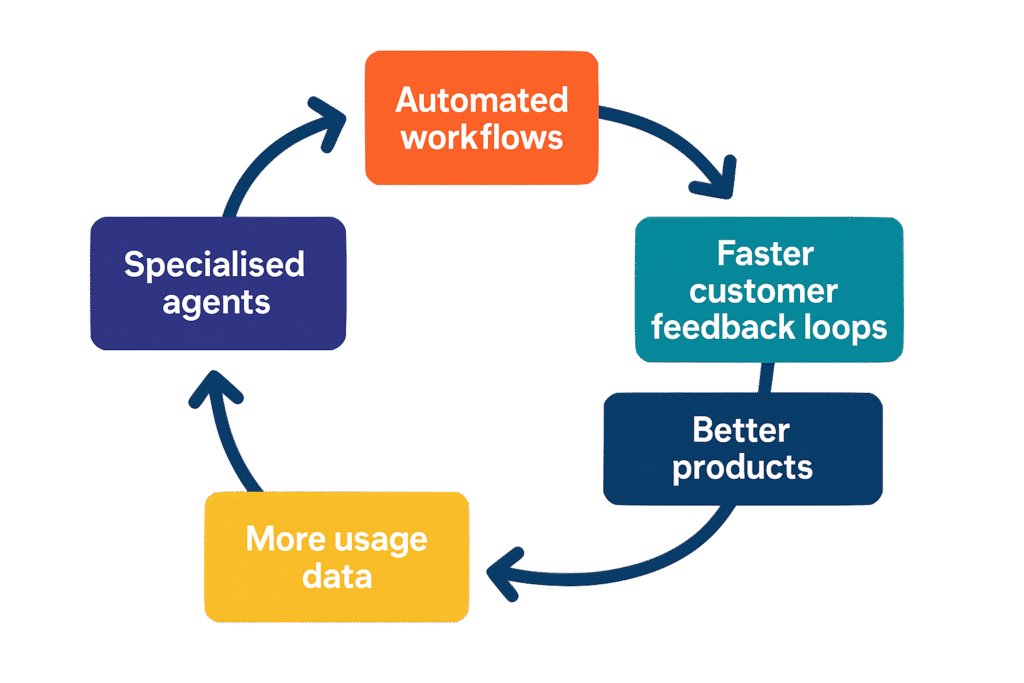

The Compounding Economics of Speed

Put the pieces together and one conclusion follows: speed compounds. Every cycle completed ahead of the competition widens the moat, not linearly but exponentially, the way compound interest does. The late adopters still have the same number of engineers on paper; they just ship half as often and learn half as fast.
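
The compound‑interest comparison can be made concrete with a little arithmetic. The sketch below assumes, purely for illustration, that every completed cycle improves a product by a fixed 2 % and that the fast team completes 12 cycles a quarter to the slow team's 6. None of these numbers come from Anthropic's data, and `relative_advantage` is a hypothetical helper.

```python
def relative_advantage(gain_per_cycle: float, fast_cycles: int, slow_cycles: int) -> float:
    """Ratio of the fast team's cumulative improvement to the slow team's.

    Each cycle is assumed to multiply capability by (1 + gain_per_cycle),
    which is the same model as compound interest.
    """
    fast_total = (1 + gain_per_cycle) ** fast_cycles
    slow_total = (1 + gain_per_cycle) ** slow_cycles
    return fast_total / slow_total


# Illustrative assumptions only: 2 % gain per cycle, 12 vs 6 cycles per quarter.
GAIN_PER_CYCLE = 0.02
FAST_PER_QUARTER = 12
SLOW_PER_QUARTER = 6

if __name__ == "__main__":
    for quarters in (1, 4, 8):
        ratio = relative_advantage(
            GAIN_PER_CYCLE,
            FAST_PER_QUARTER * quarters,
            SLOW_PER_QUARTER * quarters,
        )
        print(f"after {quarters} quarter(s): fast team is {ratio:.2f}x ahead")
```

Under these assumptions the gap itself grows with every quarter, because the ratio is raised to a higher power each time. A linear model would instead add the same fixed lead every quarter.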

We’ve seen this movie before:

- Electrification unlocked the continuous‑flow factory, crushing steam‑powered rivals.

- The internet birthed pure‑play e‑commerce before catalogue incumbents finished wiring their warehouses.

- Smartphones enabled on‑demand services that never fit the desktop paradigm.

In each case, technology was the spark; organisational redesign was the fuel.

Coding Is Only the Canary

If a code‑writing agent can lop hours off a sprint, imagine domain‑specific agents for:

- Marketing: autonomous A/B testing and media‑buying

- Sales: personalised outbound that rewrites itself per prospect

- Legal: real‑time contract red‑lining

- Research: nightly literature sweeps summarised by breakfast

Expect the same adoption curve: startups sprint, enterprises jog—and the speed divide widens function by function.

Playbooks for the Two Camps

Startups

- Embed, don’t bolt‑on. Put the agent where the work happens: the IDE, the CRM, the pull request.

- Instrument cycle time. Measure idea‑to‑iteration in minutes, not story‑points.

- Refactor org charts. Treat agents as teammates; design processes around human‑AI hand‑offs.
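
Instrumenting cycle time can start small. A minimal sketch, assuming a team logs a timestamp when an idea is picked up and another when the resulting iteration ships; the log format, the sample entries and the function names are all hypothetical:

```python
from datetime import datetime
from statistics import median

TIMESTAMP_FORMAT = "%Y-%m-%d %H:%M"


def cycle_minutes(started: str, shipped: str) -> float:
    """Minutes elapsed between picking up an idea and shipping the iteration."""
    delta = datetime.strptime(shipped, TIMESTAMP_FORMAT) - datetime.strptime(
        started, TIMESTAMP_FORMAT
    )
    return delta.total_seconds() / 60


def median_cycle_minutes(iterations: list[tuple[str, str]]) -> float:
    """Median idea-to-iteration time, which is less skewed by outliers than the mean."""
    return median(cycle_minutes(start, end) for start, end in iterations)


# Hypothetical log entries: (idea picked up, iteration shipped).
ITERATIONS = [
    ("2025-03-03 09:00", "2025-03-03 10:30"),
    ("2025-03-03 11:00", "2025-03-03 11:45"),
    ("2025-03-04 14:00", "2025-03-04 16:00"),
]

if __name__ == "__main__":
    print(f"median cycle time: {median_cycle_minutes(ITERATIONS):.0f} minutes")
```

Tracking the median week over week gives a single number that should fall as the agent becomes embedded in the workflow.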

Enterprises

- Carve out sandboxes. Small cross‑functional pods with licence to break process.

- Fast‑track revenue workflows. Customer‑facing loops pay for the rest of the transformation.

- Budget for refactoring, not just licences. The software bill is trivial next to the change‑management bill.

Choose Your Lane

The question is no longer if AI will reshape work. It’s how many learning cycles you can afford to surrender to faster rivals before the gap becomes unbridgeable.

Speed is about to become a balance‑sheet item. Which side of the divide will you be on?